We know what AI is, but what are AGI and ASI?

AI refers to machines performing tasks that typically require human intelligence. AGI is AI with general, human‑level cognitive abilities across a wide range of tasks. ASI is a hypothetical AI that surpasses human intelligence in virtually all domains.

Overview

- AI or ANI (Artificial Narrow Intelligence): specialized AI that excels at specific tasks (e.g., image recognition, playing chess, language translation). It’s the most common form in use today.

- AGI (Artificial General Intelligence): systems with broad, human‑level capabilities—understanding, learning, reasoning, and applying knowledge across many domains.

- ASI (Artificial Superintelligence): a level of intelligence that greatly exceeds human capabilities in all areas.

Scope of tasks

- ANI: narrow scope, task-specific

- AGI: wide, adaptable reasoning across domains

- ASI: superior to human performance in every domain, including creativity and problem‑solving

Learning and adaptability

- ANI: learns within fixed parameters and datasets

- AGI: can learn from diverse experiences and transfer knowledge

- ASI: would continuously self‑improve beyond human constraints

Current state and timelines

- ANI is ubiquitous today, powering search, assistants, recommendations, and more.

- AGI remains aspirational; estimates vary widely among researchers, with no consensus on when or even if it will be achieved.

- ASI is speculative science fiction at present; most experts agree it would require breakthroughs beyond AGI.

Potential implications

- Economic and labor impacts: automation of complex tasks could shift job roles and demand new skills.

- Safety and governance: AGI/ASI would raise significant ethical, safety, and governance questions, including alignment with human values.

- Research and science: AGI could accelerate discovery across fields, from medicine to physics.

Common misconceptions

- AGI does not imply immediate, conscious machines with emotions; it implies broad cognitive capabilities.

- ASI does not mean instant, uncontrollable intelligent beings; its arrival would depend on many speculative breakthroughs, and its controllability on the safety frameworks developed along the way.

Summary

- ANI: specialized AI for specific tasks

- AGI: human‑level general intelligence across tasks

- ASI: intelligence far surpassing human capabilities

How do AGI and ASI differ in capabilities?

AGI is defined as AI that can match human-level intelligence across many domains, while ASI is a hypothetical future AI that would far surpass the best human minds in virtually all areas of cognition. Both would be more capable than today’s narrow AI, but ASI would add superhuman scale, speed, and depth, along with the ability to improve itself far beyond human limits. [softbank+3]

Core capability difference

- AGI: Human-level performance on most intellectual tasks, including learning, reasoning, planning, and adapting across domains, similar to a broadly educated person. [wikipedia+1]

- ASI: Superhuman performance in essentially every intellectual task, including science, strategy, creativity, and long-term planning, not just faster computation. [moontechnolabs+2]

In short, AGI aims to do what humans can do; ASI aims to do far more than humans can do, in both breadth and depth. [netguru+1]

Learning and self‑improvement

- AGI: Can learn from diverse data and experiences, transfer knowledge between domains, and adapt to new tasks, but its self‑improvement is still constrained by design and human oversight. [softbank+2]

- ASI: Typically defined as recursively self‑improving: able to redesign its own algorithms, generate its own training data, and continually increase its capabilities without direct human guidance. [creolestudios+2]

This recursive self‑improvement is a key reason ASI is often linked to the “intelligence explosion” or technological singularity. [netguru+1]
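To make the “snowball” intuition concrete, here is a toy Python model, not a forecast. It contrasts capability that grows by a fixed amount per step (a stand‑in for externally driven, human‑paced improvement) with capability whose gain each step is proportional to its current level (a stand‑in for recursive self‑improvement). The function names, the starting level, and the 10% rate are arbitrary, hypothetical choices made only for illustration.

```python
# Toy model with hypothetical parameters; it illustrates compounding, not any real system.


def external_improvement(start: float, gain_per_step: float, steps: int) -> list[float]:
    """Capability rises by the same fixed amount each step (e.g., human-paced R&D)."""
    levels = [start]
    for _ in range(steps):
        levels.append(levels[-1] + gain_per_step)
    return levels


def recursive_improvement(start: float, rate: float, steps: int) -> list[float]:
    """Each step's gain is proportional to current capability, so gains compound."""
    levels = [start]
    for _ in range(steps):
        levels.append(levels[-1] * (1 + rate))
    return levels


if __name__ == "__main__":
    steps = 20
    # Same start and same first-step gain (0.1), so the curves differ only in compounding.
    linear = external_improvement(start=1.0, gain_per_step=0.1, steps=steps)
    compounding = recursive_improvement(start=1.0, rate=0.1, steps=steps)
    for step in (0, 5, 10, 20):
        print(f"step {step:2d}: external={linear[step]:.2f}  recursive={compounding[step]:.2f}")
```

With equal starting points and the same first‑step gain, the compounding curve pulls steadily ahead. Real systems could instead run into diminishing returns, hardware limits, or other bottlenecks, which is why whether such an “intelligence explosion” is possible at all remains contested.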

Scope and problem‑solving

- AGI: Expected to handle any task a human knowledge worker could, from scientific research to teaching, software engineering, or policy analysis, with strong but roughly human‑comparable judgment. [kanerika+2]

- ASI: Would solve problems beyond human comprehension, discover patterns humans cannot see, and generate new scientific theories or technologies at a pace and sophistication that humans could not match. [moontechnolabs+2]

Where AGI might collaborate with humans as a peer, ASI would function more like an extremely powerful, alien-level expert.

Table: AGI vs ASI capabilities

| Aspect | AGI (General) | ASI (Superintelligence) |
|---|---|---|
| Intelligence level | Roughly human-level across many domains. [wikipedia+2] | Vastly beyond the best human experts in all domains. [softbank+2] |
| Learning style | Learns and generalizes across tasks like a human. [softbank+2] | Creates its own learning strategies and data; recursively self‑improving. [softbank+1] |
| Problem‑solving | Solves most tasks humans can, with high flexibility. [moontechnolabs+1] | Cracks problems humans cannot even fully specify or understand. [softbank+2] |
| Speed and scale | Similar cognitive range to humans, but faster on some tasks. [moontechnolabs] | Operates at machine speed on global, massive‑scale data streams. [moontechnolabs+1] |
| Novel discovery | Can assist and sometimes lead human‑level research. [moontechnolabs+1] | Drives radical breakthroughs in science, technology, and strategy autonomously. [moontechnolabs+1] |
| Self‑modification | Limited or controlled self‑improvement. [softbank+1] | Open‑ended self‑modification and optimization of its own code and goals (in many visions). [softbank+2] |

Status today

- AGI: Still not achieved; current systems are closer to advanced narrow or “weak” forms of generality, and AGI remains an aspirational research target. [viso+2]

- ASI: Entirely speculative at present, dependent on first reaching robust AGI and then enabling powerful recursive self‑improvement. [kanerika+2]

If you want to go deeper, the next useful dimension is risk and governance differences: why AGI raises big alignment questions, and why ASI raises civilization‑level control questions.

What are real‑world uses of AGI today?

There are no widely accepted, deployed AGI systems in the strict “human‑level general intelligence” sense today; what exists are advanced narrow or “proto‑AGI” models being used in many real‑world applications. Most concrete use cases branded as “AGI” are actually powerful generative or multimodal AI applied across multiple tasks rather than true general intelligence. [sidetool+3]

Important clarification

- No consensus that AGI exists yet: Surveys and 2025 overviews still describe AGI as a future milestone that requires breakthroughs in general problem‑solving and knowledge transfer. [kanerika+1]

- Marketing vs reality: Many articles and vendors use “AGI” aspirationally for advanced models, but technical write‑ups usually treat them as steps toward AGI, not fully general minds. [techrevolt+2]

So “real‑world uses of AGI” today are better described as uses of advanced AI that show some generality but remain below true AGI.

Advanced AI uses often framed as AGI‑like

- Autonomous task agents in business: End‑to‑end agents can plan, call tools, and complete tasks such as drafting contracts, generating code, and running simple workflows with minimal oversight. [cloud.google+1]

- Cross‑domain copilots: Enterprise copilots (e.g., Google’s Gemini‑based Workspace assistants) summarize mail, generate documents, analyze sheets, and answer questions over internal knowledge, acting as a general knowledge‑worker assistant within one organization. [cloud.google]

These systems show broader versatility than classic narrow AI but still lack robust, human‑level general understanding and autonomy. [scientificamerican+1]
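The “plan, call tools, complete tasks” pattern behind such agents can be sketched as a loop: a planner looks at the task and the history so far, picks the next tool call or decides it is done, and the runtime executes the call and records the result. In this minimal Python sketch the planner is a hard‑coded rule standing in for a language model, and the two tools (`search_docs`, `draft_reply`) and their toy knowledge base are hypothetical; no vendor’s agent API is being reproduced.

```python
from typing import Callable, Optional

Tool = Callable[[str], str]
History = list[tuple[str, str, str]]  # (tool name, argument, result) for each step taken
Planner = Callable[[str, History], Optional[tuple[str, str]]]


def search_docs(query: str) -> str:
    """Hypothetical tool: look the query up in a tiny in-memory knowledge base."""
    knowledge_base = {"refund policy": "Refunds are allowed within 30 days."}
    return knowledge_base.get(query, "no result")


def draft_reply(facts: str) -> str:
    """Hypothetical tool: turn retrieved facts into a customer-facing reply."""
    return f"Hello! {facts} Let us know if you need anything else."


TOOLS: dict[str, Tool] = {"search_docs": search_docs, "draft_reply": draft_reply}


def scripted_planner(task: str, history: History) -> Optional[tuple[str, str]]:
    """Stand-in for a language model: choose the next (tool, argument), or None when done."""
    if not history:
        return ("search_docs", task)
    last_tool, _, last_result = history[-1]
    if last_tool == "search_docs" and last_result != "no result":
        return ("draft_reply", last_result)
    return None  # either the reply is drafted or nothing useful was found


def run_agent(task: str, planner: Planner, tools: dict[str, Tool], max_steps: int = 5) -> History:
    """Observe-decide-act loop with a hard step limit as a simple guardrail."""
    history: History = []
    for _ in range(max_steps):
        action = planner(task, history)
        if action is None:
            break
        tool_name, argument = action
        if tool_name not in tools:  # refuse to call tools that were not registered
            break
        history.append((tool_name, argument, tools[tool_name](argument)))
    return history


if __name__ == "__main__":
    for tool_name, argument, result in run_agent("refund policy", scripted_planner, TOOLS):
        print(f"{tool_name}({argument!r}) -> {result}")
```

The `max_steps` cap and the registered‑tools check are crude stand‑ins for the limits humans place around such agents. The plumbing is simple; what real systems still lack is a planner with robust, general understanding.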

Examples often cited as “AGI use cases”

- Healthcare decision support: Systems like IBM’s Watson Health (whose data and analytics assets IBM sold in 2022) were designed to analyze patient records and large medical literature corpora, suggest treatment options, and support oncology decisions; they are sometimes cited as early AGI‑style reasoning in a specialized domain. [ibm+1]

- Industrial optimization and predictive maintenance: Large AI systems monitor sensor data, detect anomalies, and optimize complex production lines or logistics networks, adjusting schedules and predicting failures in near real time. [ibm+1]

These examples fit the description “powerful and broad within a single vertical” better than “fully general intelligence.”

Multimodal “proto‑AGI” applications

- Customer service and sales: Multimodal models that understand text, voice, and sometimes images are used for richer customer support, sentiment‑aware responses, and context‑aware recommendations. [weforum+2]

- Knowledge and research assistance: Large models are used for legal analysis, financial modeling support, and code generation, giving human experts faster access to cross‑domain information and draft reasoning. [sidetool+1]

Some commentators argue these systems are “chasing and occasionally catching” AGI capabilities in narrow slices, but most researchers do not yet consider them true AGI. [techrevolt+2]

How to interpret “AGI in the real world” today

- In research and journalism, “AGI use cases” usually mean “here’s what AGI would do in healthcare, manufacturing, logistics, etc.” rather than deployments of a confirmed AGI. [kanerika+1]

- In industry and marketing, the term often labels very capable general‑purpose assistants or agents built on frontier generative models that are still narrow compared with human general intelligence. [sidetool+2]

If you want, the next step can be mapping specific “AGI‑like” patterns (tool‑using agents, long‑horizon planners, cross‑modal perception) to concrete systems you’re likely to encounter in enterprise or developer workflows.

What is the progress to ASI – how near are we?

Artificial superintelligence (ASI) does not exist today, and expert views on when or whether it will emerge vary enormously, from “possibly this century” to “not with current approaches, if ever.” The only honest summary is that progress is rapid toward stronger AI systems, but ASI remains speculative and timeline estimates are highly uncertain. [theaireport+2]

Where we are now

- Current frontier models show impressive gains in coding, reasoning benchmarks, and multimodal tasks, and some can already write and improve their own software in constrained settings. [scientificamerican+1]

- However, these systems still fall short on robust general reasoning, long‑horizon planning, and reliable self‑improvement, capabilities that most definitions of AGI or ASI would require. [hai.stanford+1]

So the field is in a phase of “advanced but brittle” systems and is not yet within sight of true superintelligence.

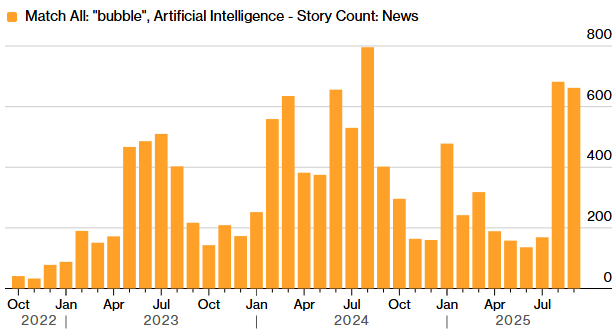

Expert timelines and disagreement

- Recent expert surveys put the median date for a 50% chance of AGI somewhere between roughly 2040 and 2061, though a vocal minority predicts much earlier dates. [forbes+1]

- Some commentators then assume a relatively fast transition from AGI to ASI, with speculative ranges from a few years to several decades; one analysis frames a “useful estimate” of about 10 years from AGI to ASI. [theaireport+1]

At the same time, many experts (around three‑quarters in one survey) think simply scaling today’s techniques is not enough for AGI, let alone ASI. [theaireport]

Indicators of movement toward ASI

- Rapid capability scaling: Benchmarks show steep year‑on‑year jumps in areas like multimodal understanding and software engineering, plus large drops in compute cost for a given capability level. [hai.stanford+1]

- Early self‑improvement: Leading models can already optimize their own code or assist in model design, raising the question of whether this could ever “snowball” into open‑ended self‑improvement. [scientificamerican+1]

These trends are why some researchers argue we might be seeing the first steps toward systems that could one day underpin superintelligence, even if that point is still far away. [scientificamerican]
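“Optimizing their own code” in a constrained setting can be pictured as a loop that proposes changes to itself and keeps only those that a fixed, externally written benchmark rewards. The toy Python sketch below uses random hill climbing on a single hypothetical numeric setting; the `benchmark` and `self_tune` functions and their parameters are illustrative assumptions, not a description of how any particular model improves itself.

```python
import random


def benchmark(setting: float) -> float:
    """Hypothetical fixed evaluator written by humans; the best score is 0.0 at setting == 3.0."""
    return -(setting - 3.0) ** 2


def self_tune(setting: float, rounds: int, step_size: float, seed: int = 0) -> tuple[float, float]:
    """Random hill climbing: propose a tweak to the setting, keep it only if the benchmark improves."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    best_score = benchmark(setting)
    for _ in range(rounds):
        candidate = setting + rng.uniform(-step_size, step_size)  # proposed self-modification
        score = benchmark(candidate)
        if score > best_score:  # accept only changes the fixed benchmark rewards
            setting, best_score = candidate, score
    return setting, best_score


if __name__ == "__main__":
    start = 0.0
    tuned, score = self_tune(start, rounds=500, step_size=0.5)
    print(f"start score {benchmark(start):.3f} -> tuned setting {tuned:.3f}, score {score:.5f}")
```

The loop can never score above the benchmark’s ceiling of 0.0, and it cannot rewrite the benchmark or its own search procedure. That boundary is what separates this kind of constrained self‑optimization from the open‑ended, recursive self‑improvement that ASI scenarios assume.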

Cautious views and roadmaps

- Researchers like Yann LeCun emphasize that even ambitious roadmaps aiming at “advanced machine intelligence” expect only prototypes with animal‑level world understanding (e.g., “cat or rat” level) within a few years, not human‑level or superhuman minds. [eetimes]

- Groups branding themselves around “ASI” publish roadmaps that explicitly admit human‑level AGI has not yet been reached and present ASI as a longer‑term goal after major research advances. [superintelligence]

These more conservative views highlight that achieving robust world models, reasoning, and planning is still an unsolved problem.

How near are we, realistically?

- No ASI today: No existing system fits the common definition of intelligence vastly beyond the best human experts across all domains. [theaireport+1]

- AGI first, ASI later (if at all): Most structured analyses treat ASI as contingent on first achieving AGI, with a further uncertain period (possibly years to decades) before any superintelligent phase. [forbes+1]

Taken together, a reasonable reading of the evidence is that ASI is not imminent, serious people disagree on whether it will arrive this century or ever, and the main near‑term focus is on managing rapidly strengthening but still fallible AI systems rather than on a concrete ASI arrival date. [hai.stanford+2]